An SEO Guide to Launching New Pages

Websites gain new pages regularly, whether by expanding existing content, introducing new services, launching new products or publishing blog posts.

Adding new content to a site can help drive SEO performance, but both the content and the technical aspects of SEO need to be considered to generate improved results.

What should be tested when creating new pages?

We’ve worked on a lot of product and campaign launches over the years, covering every aspect of content creation and launch.

It’s imperative that the more technical side of SEO is not neglected; otherwise, there may be barriers to new pages being crawled and indexed. Failing to build these checks into the launch process for new content will hold back results.

In this article, we’ll go through the main checkpoints for launching newly created pages, covering the following areas:

- Indexing

- Crawlability

- Rendering

- Page structure and content

- Page Speed

Indexing

Getting new content on Google

When creating new pages or updating existing ones, you ideally want them to be indexed and appear on Google as quickly as possible. If your site is well optimised from a technical SEO standpoint, Google (and other search engines) will find and index these pages given enough time.

However, consider occasions when many retailers start selling a new product at the same time, such as the latest iPhone. There is a competitive advantage to be gained from having search engines index the new pages as quickly as possible. If your competitors are faster here, they will be able to outrank you for relevant searches, at least initially.

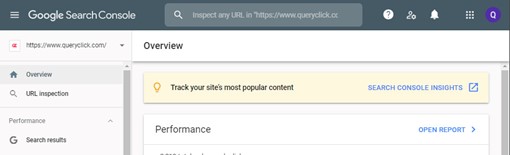

The main tool for informing Google of new pages, or that the content on a page has been updated, is the URL Inspection tool in Google Search Console, where pages can be submitted to be indexed or re-indexed.

To do this, enter the desired URL into the “Inspect any URL…” search box at the top of the page. The tool then shows whether the URL is already included in Google’s index and can be returned in search engine results.

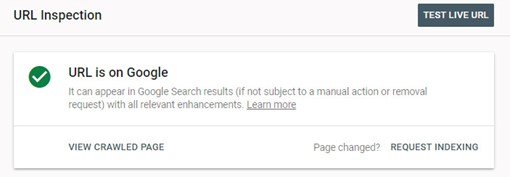

If the URL has already been indexed, a green tick will show alongside the following message, informing you that the page is on Google.

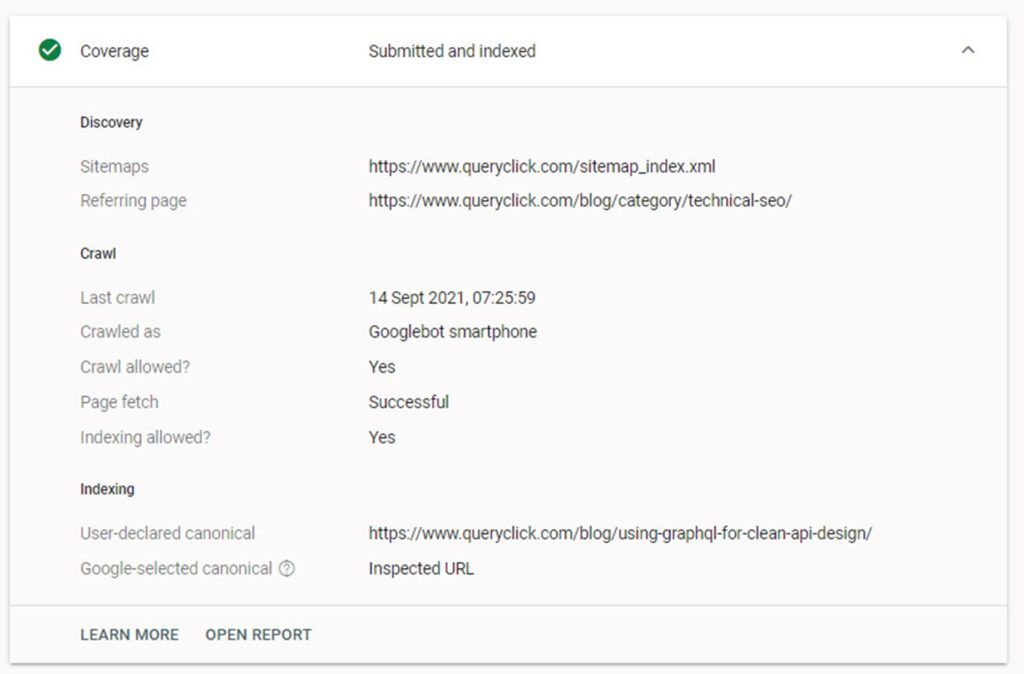

When a URL has already been indexed, more information can be found under the ‘Coverage’ drop-down, such as when the URL was last crawled and whether it was found in a Sitemap.

This is important if the content on a page has been updated as part of a release, e.g. the product has moved from the ‘Pre-order’ phase to the ‘Buy-now’ phase. In this case, check that the URL has been crawled since the content was updated. If it hasn’t, re-submit the URL so that Google is aware the content has changed.
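
The check above comes down to comparing two dates. Below is a minimal Python sketch. It assumes you have noted the last crawl date from the URL Inspection ‘Coverage’ details and the time the content changed. `needs_resubmission` is a hypothetical helper, not part of Search Console.

```python
from datetime import datetime

def needs_resubmission(last_crawled: datetime, content_updated: datetime) -> bool:
    """Return True if Google's last crawl happened before the content changed.

    Hypothetical helper: both timestamps are assumed to be recorded by hand,
    last_crawled from the URL Inspection 'Coverage' details and
    content_updated from your own release log.
    """
    return last_crawled < content_updated

# Illustrative dates: the page was crawled while it still said 'Pre-order'.
crawled = datetime(2024, 9, 9, 8, 30)
switched_to_buy_now = datetime(2024, 9, 13, 9, 0)
if needs_resubmission(crawled, switched_to_buy_now):
    print("Re-submit the URL via Request Indexing")
```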

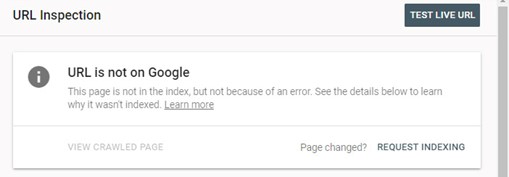

If a URL hasn’t been indexed, a different message is shown, stating that the URL is not on Google. This is the most likely outcome for a brand-new page that has only recently gone live.

When this is the case, we advise testing the page via the ‘Test Live URL’ button. This will allow you to see if the page is indexable and can be returned in search engine results without submitting a request to index it.

Any issues are flagged during the test. Once they are resolved, the page can be submitted to Google using the ‘Request Indexing’ link.

It is important to test URLs before submitting them, to avoid wasting the submission allowance on non-indexable URLs.

The ‘Request Indexing’ button adds the individual page to a list of URLs that Google will crawl.

URL submission limits

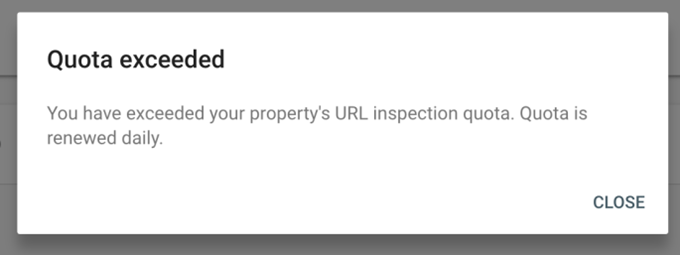

An important thing to remember is that Google has been reducing the number of URLs that can be submitted via the URL Inspection tool in GSC over the past few years.

At the time of writing, only 10 individual URLs can be submitted per day.

Granted, this isn’t ideal, as there may be many more pages that you want crawled. For this reason, it is critical that only the most important URLs are submitted to be crawled or recrawled.
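
Choosing which URLs get the daily submissions is a simple ranking exercise. Here is a Python sketch. It assumes you give each URL your own priority score, for example based on launch importance. The 10-per-day figure is the limit described above and may change. `split_submissions` is a hypothetical name.

```python
DAILY_LIMIT = 10  # manual URL Inspection submissions per day, as described above

def split_submissions(priorities: dict[str, int], limit: int = DAILY_LIMIT):
    """Split URLs into (submit today, leave to links and the XML Sitemap).

    `priorities` maps each URL to a score you assign; higher means more
    important. Ties keep their original order because sorted() is stable.
    """
    ranked = sorted(priorities, key=priorities.get, reverse=True)
    return ranked[:limit], ranked[limit:]

# Illustrative input: twelve new product pages with made-up scores.
pages = {f"https://www.example.com/product-{n}/": n for n in range(12)}
today, later = split_submissions(pages)
print(len(today), later)
```

The URLs left in `later` are the ones the next section's techniques (internal links and the XML Sitemap) need to cover.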

What to do if there are more than 10 pages to submit

When search engine bots crawl a site, they rarely crawl just a single page; they will often follow links to other URLs. They also recrawl sites periodically, looking for updated content and new pages, because Google wants to provide the most up-to-date, relevant search results.

There are a few things that can help get search engines to crawl any pages that you have not been able to individually request to be indexed.

- Ensure that other pages link to these URLs.

- Ensure that the URLs are included in an XML Sitemap.

- Resubmit the XML Sitemap in Google Search Console.

Crawlability

It is important that new pages do not harm the crawlability of the site. Specifically, they must not reduce the number of indexable URLs that search engine bots visit when they crawl the site.

When bots spend their visits on non-indexable URLs instead, this is known as crawl budget wastage. It can mean that search engines take longer to find pages and then rank them in search results.

It can also dilute the link equity passed between pages, reducing the potential of individual URLs to rank well.

When new pages are created, it is important to review all of their outbound links, as these should ideally point to URLs that return a 200 OK status and are indexable.

However, there can be exceptions to this rule. For example, a link may point to a page that requires the user to be logged in, so they encounter a temporary redirect to a login page. Or it may point to a product variation that has been canonicalised to the main version.

Things like this need to be kept in mind when checking any outbound links.

Crawling a page’s outbound links

When crawling a page’s outbound links, scrutinise the destination of each link, particularly any that does not return a 200 OK status or is not indexable. Common problems include:

- Redirects*

- Redirect chains

- Links to error pages

- Links to noindex URLs

- Links to canonicalised URLs*

*As mentioned previously, sometimes there can be exceptions to the rule.

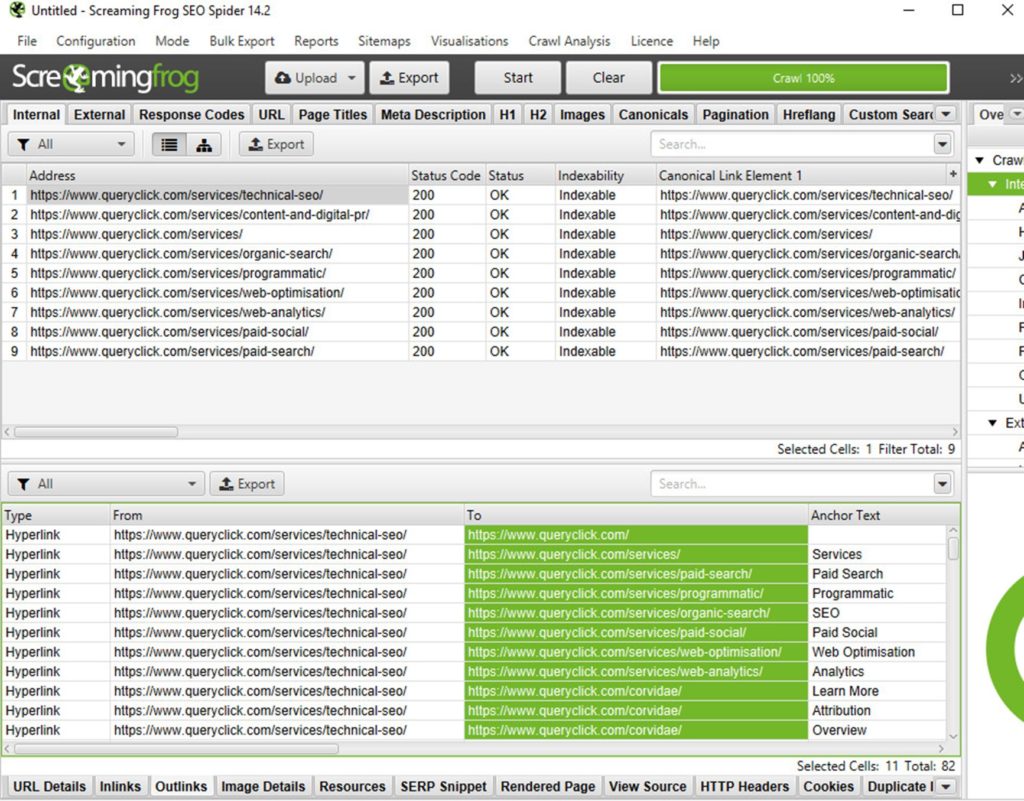

Lots of tools can crawl outbound links, and one of the most common is Screaming Frog. An effective way of analysing outbound links is to run two Screaming Frog crawls. The first crawls the new pages on the website, and the second crawls the list of outbound links copied from those pages.

This makes it very quick and easy to run through the outbound links and identify those that may be problematic.

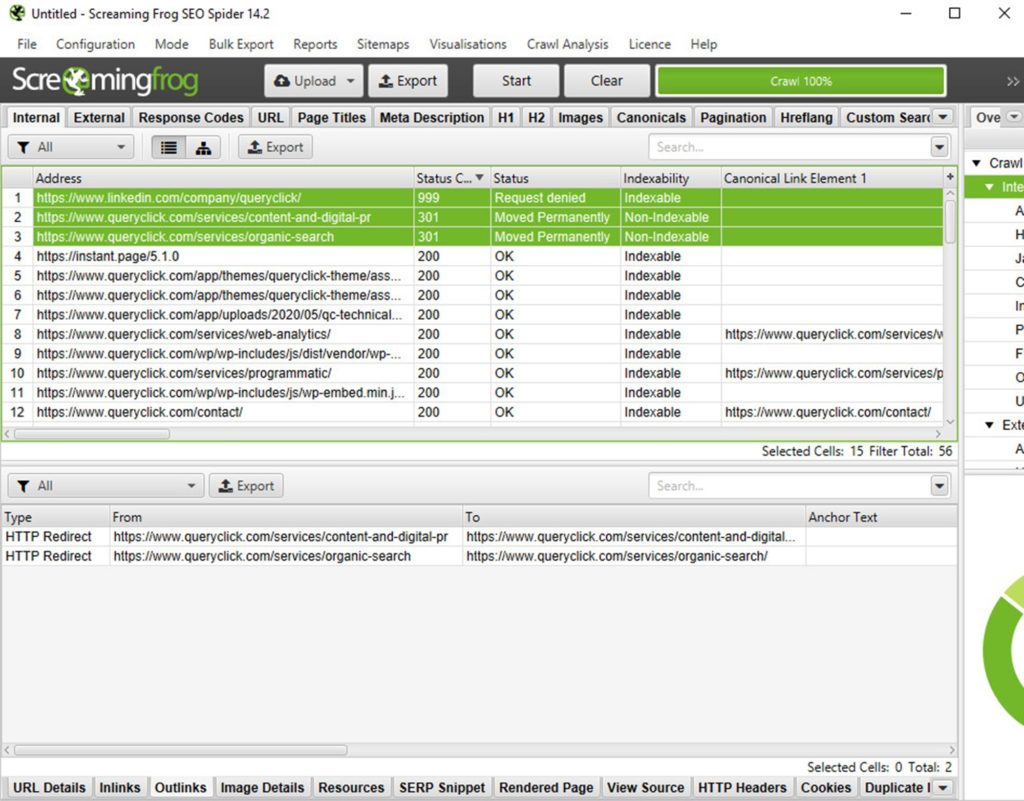

After crawling the initial URLs in Screaming Frog, you can select a single URL from the list that will then populate the bottom panel with further information about this URL. In the example below, we’ve highlighted https://www.queryclick.com/services/technical-seo/ and are then presented with all of the outbound links from that URL in the ‘Outlinks’ tab.

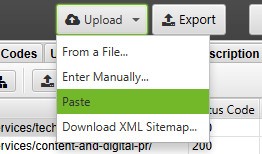

To crawl these URLs and check that they point to appropriate destinations, select and copy the entire list.

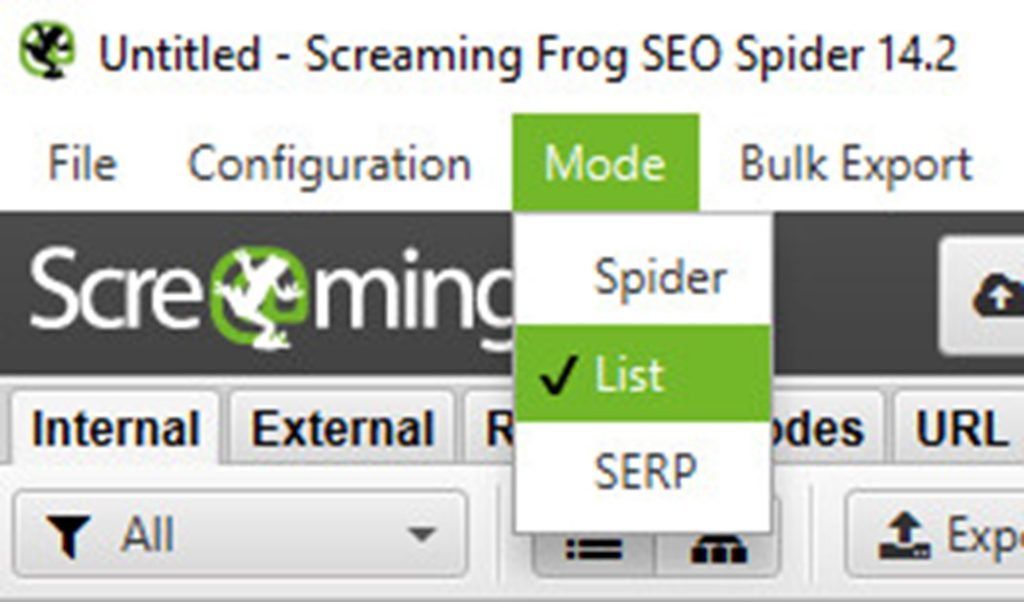
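
If you'd rather script this step than copy from the ‘Outlinks’ tab, the list can be built from a page's HTML. Below is a minimal sketch using Python's standard-library `html.parser`. It only sees links present in the raw HTML, not ones added by JavaScript. `outbound_links` is a hypothetical helper, not something Screaming Frog provides.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in the HTML."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

def outbound_links(page_url: str, html: str):
    """Return (internal, external) absolute URLs, de-duplicated, in page order."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    site = urlparse(page_url).netloc
    internal, external, seen = [], [], set()
    for href in collector.hrefs:
        url = urldefrag(urljoin(page_url, href)).url  # resolve relative links, drop #fragments
        if urlparse(url).scheme not in ("http", "https") or url in seen:
            continue  # skip mailto:, tel:, javascript: and duplicates
        seen.add(url)
        (internal if urlparse(url).netloc == site else external).append(url)
    return internal, external

# Illustrative page and HTML.
page = "https://www.example.com/services/technical-seo/"
html = (
    '<a href="/contact/">Contact</a>'
    '<a href="/contact/#form">Form</a>'
    '<a href="https://www.linkedin.com/company/example">LinkedIn</a>'
    '<a href="mailto:hello@example.com">Email</a>'
)
internal, external = outbound_links(page, html)
print(internal, external)
```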

On the second instance of Screaming Frog, ensure that the Mode has been changed from Spider to List.

This allows you to use Upload > Paste to add the copied list of outbound links and crawl them, which shouldn’t take more than a few seconds.

Having two instances of Screaming Frog open allows you to keep track of, and work through, a list of URLs in one and use the second instance to identify problematic links for each of the new pages that are being reviewed.

Once the list has been populated, it’s easy to identify any URLs that do not return a 200 status, are not indexable or have other issues. Crawled URLs can also be sorted by any column in Screaming Frog, for example from highest to lowest status code, making it quick and easy to see which links return which status codes.

In the screen capture above, there are three outbound links from our Technical SEO page that point to non-200 status URLs. One of these goes to LinkedIn, which can be ignored because it is an external site that actively blocks our crawler.

The other two are internal links that result in internal 301 redirects. This isn’t great: redirects slow down page loading, harm user experience, waste crawl budget and dilute link equity.

It’s best to raise these with the relevant team so that the links can be updated to point directly to the final URLs.
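
Once the second crawl has run, triaging the results against the problem types listed earlier can be scripted. Here is a sketch in Python. It assumes each crawled link has been exported into a simple record. The `CrawlResult` fields below are hypothetical and are not Screaming Frog's export format. Known exceptions, such as login redirects or canonicalised product variations, are passed in so they are not flagged.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class CrawlResult:
    # Hypothetical record for one crawled outbound link.
    url: str
    status: int                      # HTTP status code of the first response
    redirect_hops: int = 0           # redirects followed before the final URL
    noindex: bool = False            # True if meta robots / X-Robots-Tag says noindex
    canonical: Optional[str] = None  # canonical URL declared by the page, if any

def classify(result: CrawlResult) -> str:
    """Map one crawled link to the problem types listed in the article."""
    if 300 <= result.status < 400:
        return "redirect chain" if result.redirect_hops > 1 else "redirect"
    if result.status != 200:
        return "error"  # 4xx/5xx, or anything else that isn't 200 OK
    if result.noindex:
        return "noindex"
    if result.canonical and result.canonical != result.url:
        return "canonicalised"
    return "ok"

def problem_links(results, allowed=frozenset()):
    """Return {url: issue} for links to review, skipping URLs in `allowed`."""
    flagged = {}
    for result in results:
        issue = classify(result)
        if issue != "ok" and result.url not in allowed:
            flagged[result.url] = issue
    return flagged

# Illustrative crawl results.
results = [
    CrawlResult("https://www.example.com/services/", 200),
    CrawlResult("https://www.example.com/old-page/", 301, redirect_hops=1),
    CrawlResult("https://www.example.com/older-page/", 301, redirect_hops=2),
    CrawlResult("https://www.example.com/removed/", 404),
    CrawlResult("https://www.example.com/draft/", 200, noindex=True),
    CrawlResult("https://www.example.com/shoe-red/", 200,
                canonical="https://www.example.com/shoe/"),
    CrawlResult("https://www.example.com/account/", 302, redirect_hops=1),
]
print(problem_links(results, allowed={"https://www.example.com/account/"}))
```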

Inclusion in an XML Sitemap

Another way of improving the crawlability of pages and ensuring that search engines can find them is to include them in the XML Sitemap.

An XML Sitemap is essentially a blueprint of your website, created to make it easier for search engine bots to find important URLs.

It can normally be found at www.example.com/sitemap.xml. If the Sitemap (or a Sitemap Index file) isn’t there, check the robots.txt file (found at www.example.com/robots.txt), as this should reference the XML Sitemap location.

If it doesn’t, and you are having difficulty finding the Sitemap, search engine bots are likely to struggle too. This should be addressed.

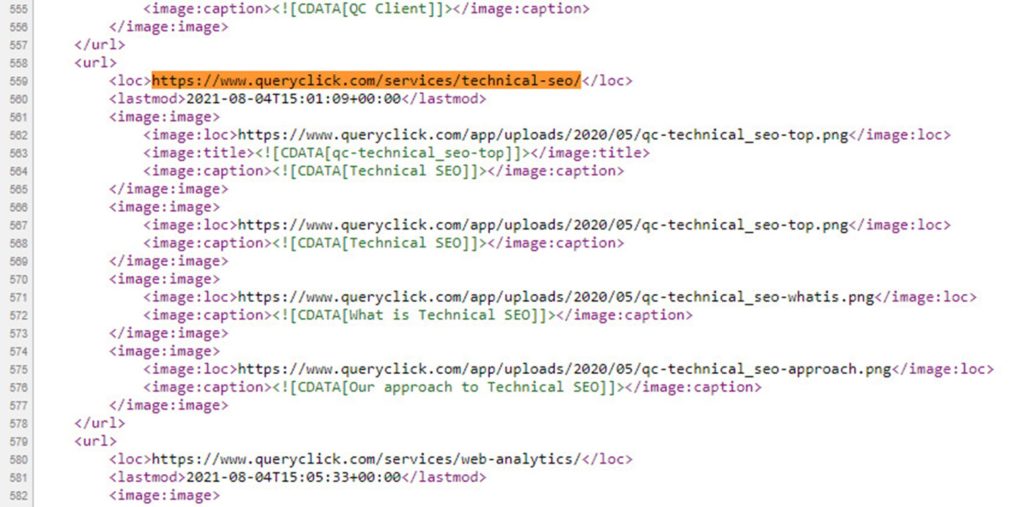
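
The robots.txt lookup can also be scripted. Python's standard-library `urllib.robotparser` already collects `Sitemap:` lines through `site_maps()`, available from Python 3.8. The sketch below parses a robots.txt body you have already fetched, for example with `urllib.request.urlopen`. The example file contents are made up.

```python
from urllib.robotparser import RobotFileParser

def sitemaps_from_robots(robots_txt: str) -> list[str]:
    """Return the Sitemap URLs declared in a robots.txt body ([] if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when there are none

# Illustrative robots.txt contents.
robots_txt = """\
User-agent: *
Disallow: /checkout/

Sitemap: https://www.example.com/sitemap_index.xml
"""
print(sitemaps_from_robots(robots_txt))
```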

Once you have tracked down the Sitemap, you can manually check that a page’s URL is included in it by opening the browser’s find box (Ctrl + F, or Cmd + F on a Mac) and pasting the URL.
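
For more than a handful of URLs, the same check can be done programmatically. Here is a sketch using Python's `xml.etree.ElementTree`. It assumes the Sitemap has already been downloaded as text. Matching is exact, so differences such as a missing trailing slash, or `http` vs `https`, will show up as missing. The helper names are hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_locs(xml_text: str) -> set[str]:
    """Return every <loc> value in a Sitemap.

    For a Sitemap Index these are the child Sitemap URLs, so each child
    Sitemap would need to be fetched and checked in turn.
    """
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.iterfind(".//sm:loc", NS) if loc.text}

def missing_from_sitemap(urls: list[str], xml_text: str) -> list[str]:
    """Return the URLs (exact string match) that are not listed in the Sitemap."""
    listed = sitemap_locs(xml_text)
    return [url for url in urls if url not in listed]

# Illustrative Sitemap.
sitemap_xml = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://www.example.com/</loc></url>"
    "<url><loc>https://www.example.com/new-product/</loc></url>"
    "</urlset>"
)
missing = missing_from_sitemap(
    ["https://www.example.com/new-product/", "https://www.example.com/other-page/"],
    sitemap_xml,
)
print(missing)
```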

Ideally, XML Sitemaps should be dynamic, meaning the Sitemap updates automatically when new pages are added to the site.
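
Conceptually, a dynamic Sitemap is regenerated from the current list of pages whenever that list changes. Below is a minimal Python sketch. It assumes your CMS or database can supply each page's URL and last-modified date; how you fetch them depends on your stack. `build_sitemap` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages) -> str:
    """Build Sitemap XML from (url, last_modified_date) pairs.

    In a dynamic setup this would be re-run (or served on request) whenever
    pages are added or updated, so new URLs appear without manual edits.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes &, < and >
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Illustrative pages.
pages = [
    ("https://www.example.com/", date(2024, 9, 1)),
    ("https://www.example.com/new-product/", date(2024, 9, 13)),
]
print(build_sitemap(pages))
```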

Rendering

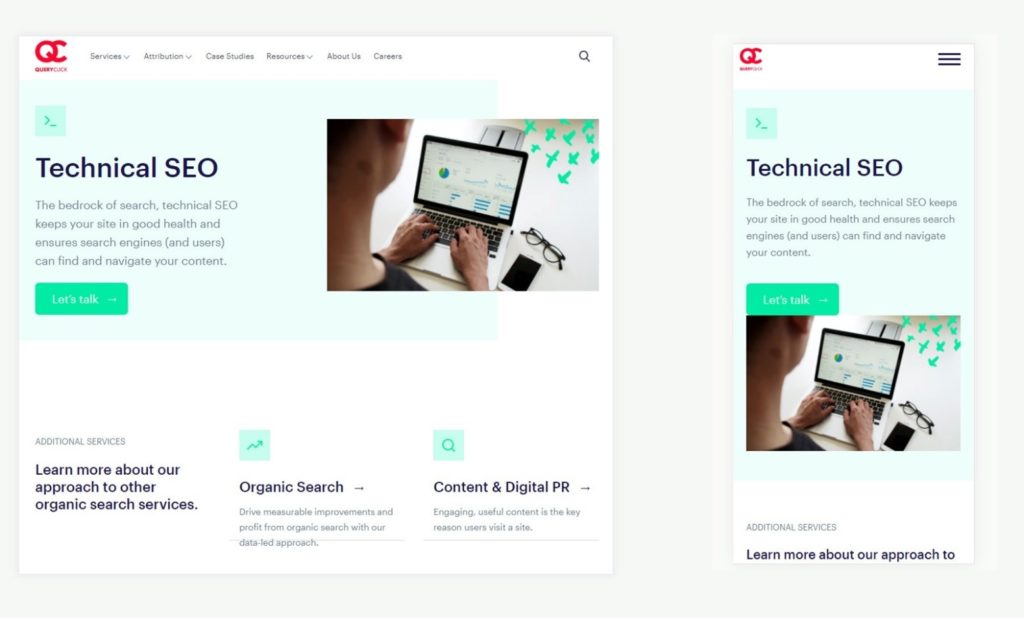

There are a few different areas to focus on with regard to rendering, but particular attention should be paid to content parity between mobile and desktop, and to JavaScript rendering.

Mobile vs desktop content parity

Although Google now takes a mobile-first approach to indexing, it still looks at the content that sites provide to desktop users and reviews the page experience.

With the desktop versions of pages, some elements will have much less of an impact on performance than they will on the mobile version of the page.

However, content parity is still important as ideally, sites should be providing the same information to users regardless of device.

Anything else is seen as a poor user experience and could impact performance for queries made on certain devices in the search results.

At this point, it is also worth mentioning Google’s Mobile-Friendly Test, a useful resource for checking that nothing on a page negatively affects mobile usability, another factor Google scores pages on. Google retired the tool in December 2023 and points users to Lighthouse for similar checks.

JavaScript rendering

Google has made many claims that its bots can crawl JavaScript (and it’s true that they can). What it rarely highlights is that crawling, understanding and indexing JavaScript-generated information on a page takes considerably longer.

This is because rendering JavaScript is far more resource-heavy, and therefore more expensive to run, than crawling plain HTML.

In SEO terms, this means that JavaScript-generated content can take longer for search engines such as Google to index and rank.

Ideally, no important information on the page should be JavaScript-generated.

Instead, it should be included in the page’s HTML so that search engine bots can easily find, index and rank it.

Testing JavaScript in a browser

There are quite a few browser plugins available that let you turn off JavaScript on a page. One such plugin is Quick JavaScript Switcher for Chrome, which lets you toggle JavaScript on or off on an open page to see what impact it has.

Some pages will change more than others. As long as the main textual content is still visible with JavaScript turned off, search engines will be able to find it easily.

Running this test on our own Technical SEO page shows very little change, with the content still visible when JavaScript is turned off.
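
A scripted version of the same test checks whether key phrases appear in the raw HTML the server returns, before any JavaScript runs (for example, the page source fetched without a browser). Below is a rough Python sketch using `html.parser`. The phrases to check are your own choice, and the text extraction is approximate rather than a full rendering model. `missing_without_js` is a hypothetical helper.

```python
from html.parser import HTMLParser

class VisibleText(HTMLParser):
    """Collect text from raw HTML, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0  # > 0 while inside a skipped element
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.parts.append(data)

def missing_without_js(raw_html: str, key_phrases: list[str]) -> list[str]:
    """Return the key phrases that do not appear in the raw (unrendered) HTML text."""
    parser = VisibleText()
    parser.feed(raw_html)
    parser.close()
    # Normalise whitespace and case; text from adjacent tags is joined with spaces.
    text = " ".join(" ".join(parser.parts).split()).lower()
    return [p for p in key_phrases if " ".join(p.split()).lower() not in text]

# Illustrative page: the call to action is only added by JavaScript.
raw_html = (
    "<h1>Technical SEO</h1><p>We fix crawl and indexing issues.</p>"
    '<div id="cta"></div>'
    '<script>document.getElementById("cta").textContent = "Book a free audit";</script>'
)
missing = missing_without_js(
    raw_html, ["Technical SEO", "We fix crawl and indexing issues.", "Book a free audit"]
)
print(missing)
```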

Page structure and content

When reviewing the page structure and content, it’s important that the content is optimised for target keywords, is on-brand and provides value for users. For that to be effective, you need to make sure you have the right elements on a page and arrange them effectively.

There should only be a single H1 tag

Yes, Google might say that H1 tags are not ‘critical’, and John Mueller has been quoted as saying “Your site is going to rank perfectly fine with no H1 tags or five H1 tags”.

However, H1 tags still carry some importance and help both search engines and users better understand what the page is about.

There is an argument for having multiple H1 tags in an extremely long article, but multiple H1 tags muddy the water and make it less clear to search engines what your page is about.

So, stick to a single H1 tag for the best results.
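
Counting H1 tags is easy to automate across new pages. Here is a minimal Python sketch using the standard-library `html.parser`, which lower-cases tag names, so `<H1>` is counted too. `count_h1` is a hypothetical helper.

```python
from html.parser import HTMLParser

class H1Counter(HTMLParser):
    """Count opening <h1> tags in a page's HTML."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "h1":  # html.parser passes tag names in lower case
            self.count += 1

def count_h1(html: str) -> int:
    counter = H1Counter()
    counter.feed(html)
    counter.close()
    return counter.count

# Illustrative HTML with an accidental second H1.
html = "<h1>Technical SEO</h1><h2>Audits</h2><H1 class='promo'>Offer</H1>"
if count_h1(html) != 1:
    print(f"Expected one H1, found {count_h1(html)}")
```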

Heading tags used appropriately

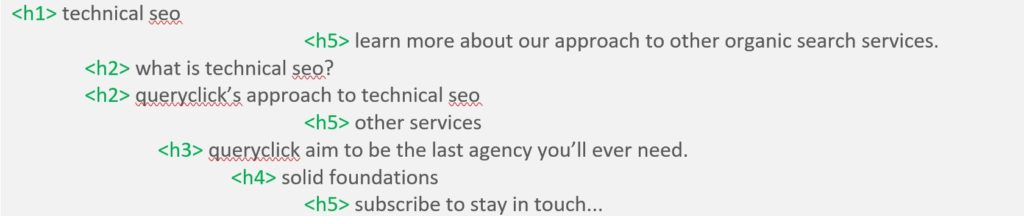

Heading tags help users and search engines better understand the content on the page. They should conform to a clear hierarchy; H1 > H2 > H3, etc. This improves readability, summarises each section of the page and helps search engines to determine the purpose of the content on the page.

As already mentioned, ideally there should only be a single H1 per page which is then followed by subheadings such as H2s and H3s, etc. This helps to prioritise the importance of the content included on the page.

H1s and H2s are intended as the primary headings and can have a small impact on rankings. Headings can therefore also contain important keywords where appropriate, as long as they remain relevant to the content.

H3 tags and other subheadings are given less importance from a ranking perspective, but they help to structure the page content. It is acceptable for some heading levels to be skipped, as long as the headings still descend logically, e.g. H1 > H3 > H5 > H6. Where possible, though, they should retain a clear hierarchy.

Here’s a quick look at our heading hierarchy from the Technical SEO page:

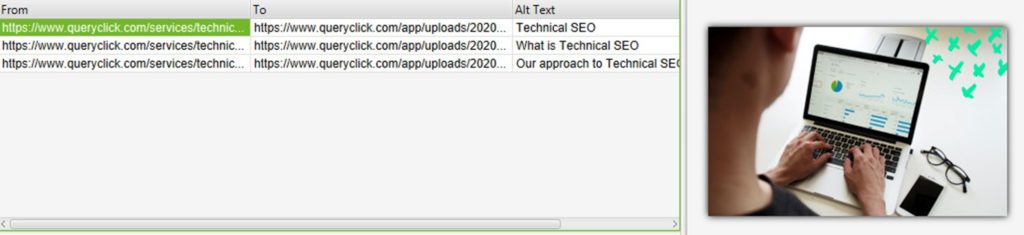
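
To review the hierarchy programmatically, the headings can be extracted in order and any jump of more than one level flagged for a human to check. Because some skipped levels are acceptable, as noted above, the sketch reports them for review rather than treating them as errors. It is written in Python with `html.parser`. `heading_outline` is a hypothetical helper, and nested headings are not handled.

```python
from html.parser import HTMLParser

HEADING_LEVELS = {f"h{n}": n for n in range(1, 7)}

class HeadingOutline(HTMLParser):
    """Record (level, text) for each <h1>-<h6> element, in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None  # level of the heading currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_LEVELS:
            self._level, self._text = HEADING_LEVELS[tag], []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in HEADING_LEVELS and self._level is not None:
            text = " ".join("".join(self._text).split())
            self.headings.append((self._level, text))
            self._level = None

def heading_outline(html: str):
    """Return (outline, flagged): all headings, plus those that skip levels."""
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    flagged, previous = [], 0
    for level, text in parser.headings:
        if level > previous + 1:  # e.g. H2 followed directly by H4
            flagged.append((level, text))
        previous = level
    return parser.headings, flagged

# Illustrative HTML.
html = "<h1>Technical SEO</h1><h2>Our <em>audits</em></h2><h4>Log files</h4><h2>Contact</h2>"
outline, flagged = heading_outline(html)
for level, text in outline:
    print("  " * (level - 1) + f"H{level}: {text}")
print("Review:", flagged)
```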

Checking image alt tags

Image alt tags describe the contents of an image for users with screen readers and for those who have images disabled in their browsers. Search engines also use them to better understand images, and they can help build the overall relevance of a page to relevant search queries.

Ideally, all images within the main body content of a page should include alt text. The alt text should describe the image and, where it makes sense to do so, include the page’s target keywords.

Alt text can be checked manually by inspecting images, or at scale using crawling tools.

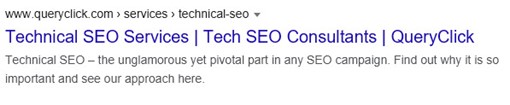
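
A crawler-independent way to spot gaps is to scan each page's HTML for `<img>` tags. Here is a Python sketch using `html.parser`. It reports images with no `alt` attribute separately from those with an empty one, since `alt=""` is the accepted way to mark purely decorative images. `audit_alt` is a hypothetical helper and does not judge whether the alt text is actually descriptive.

```python
from html.parser import HTMLParser

class ImageAltAudit(HTMLParser):
    """Sort <img> tags by the state of their alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []  # src of images with no alt attribute at all
        self.empty = []    # src of images with alt="" (fine only if decorative)

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        if "alt" not in attributes:
            self.missing.append(src)
        elif not (attributes["alt"] or "").strip():
            self.empty.append(src)

def audit_alt(html: str):
    audit = ImageAltAudit()
    audit.feed(html)
    audit.close()
    return audit.missing, audit.empty

# Illustrative HTML.
html = (
    '<img src="/images/audit-dashboard.png" alt="Technical SEO audit dashboard">'
    '<img src="/images/crawl-chart.png">'
    '<img src="/images/divider.svg" alt="" />'
)
missing, empty = audit_alt(html)
print("Missing alt:", missing)
print("Empty alt (check these are decorative):", empty)
```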

Ensuring Meta Tags and Page Titles are unique

Every page on a site should have a unique Page Title and Meta Description. Both elements are the first thing users see in search engine results, before clicking through to your page.

Therefore, it’s extremely important that they are well-written and include relevant keywords.

The Page Title is important for search engines as well as users. Search engines use titles to understand the content and purpose of a page, so a well-written title can help you build relevance to target keywords, which can have a positive impact on your rankings.

Ideally, a new page should have unique keyword targets and shouldn’t be targeting the same main keyword as any other pages on the site.

So, when creating a new page, write a unique, optimised title that summarises its content. If page titles are not unique and you are targeting the same keyword(s) with multiple pages, keyword cannibalisation can occur and restrict your performance.

Although the Meta Description has no direct impact on rankings, it can improve click-through rate because it acts as a convincer, persuading users to visit the page. It can therefore be beneficial to include a call to action in it.
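
Checking uniqueness across a site is a grouping problem, and the same check works for Page Titles and Meta Descriptions. Here is a Python sketch. It assumes you have a mapping of URL to title (or description), for example from a crawl export. `find_duplicates` is a hypothetical helper.

```python
from collections import defaultdict

def find_duplicates(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs whose text (Page Title or Meta Description) is identical.

    `pages` maps URL -> text, e.g. taken from a crawl export. Comparison
    ignores letter case and extra whitespace.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[" ".join(text.split()).lower()].append(url)
    return {text: urls for text, urls in groups.items() if len(urls) > 1}

# Illustrative titles: the new page reuses an existing title.
titles = {
    "https://www.example.com/technical-seo/": "Technical SEO Services | Example",
    "https://www.example.com/new-technical-seo-page/": "Technical SEO services | Example ",
    "https://www.example.com/contact/": "Contact Us | Example",
}
for title, urls in find_duplicates(titles).items():
    print(f"{title!r} is used by: {', '.join(urls)}")
```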

Page Speed

Page loading speed has been a ranking factor for Google for over 10 years, and Google has placed increasing importance on it with the switch to mobile-first indexing.

When reviewing the page speed of a URL, any improvements that can be made will help its potential ranking performance. However, overall website speed is also likely to affect rankings.

When testing the page speed of a new page, the goal is to make sure it performs in line with, or better than, most similar pages. It’s crucial to ensure that nothing is drastically slowing the page down and making it perform worse than comparable pages.

Specific page elements such as banners, pop-ups, and page-specific JavaScript and CSS files can all slow down page loading when a new page is launched.

There are lots of tools available online for testing page speed, and the first should always be Google’s PageSpeed Insights. It is a good starting point for testing how well pages load and provides plenty of recommendations for further improvements.
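
Once you have the same metric for the new page and a handful of similar pages (for example, Largest Contentful Paint from PageSpeed Insights), the ‘in line with or better than’ check can be made explicit. Here is a Python sketch. Comparing against the median and using a 10% tolerance are both assumptions, not Google thresholds. `slower_than_peers` is a hypothetical helper.

```python
from statistics import median

def slower_than_peers(new_page_ms: float, peer_ms: list[float],
                      tolerance: float = 0.10) -> bool:
    """Return True if the new page is more than `tolerance` slower than the
    median of comparable pages (lower values are better, e.g. LCP in ms)."""
    baseline = median(peer_ms)
    return new_page_ms > baseline * (1 + tolerance)

similar_product_pages = [2100.0, 2400.0, 2300.0]  # illustrative LCP values in ms
print(slower_than_peers(2600.0, similar_product_pages))
```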

Summary

It’s inevitable that your website will evolve with new pages being added regularly.

To achieve maximum performance from new pages, it’s crucial that your SEO approach goes beyond keyword optimisation of the content and also considers technical factors.

This requires ensuring your pages can be crawled and indexed. It’s also key that pages do not negatively impact the overall crawlability of a site.

Rendering is another key driver of performance for new pages and close attention should be paid to mobile and desktop parity as well as the use of JavaScript to render key information.

Finally, each new page should load as quickly as possible while containing all the structural elements that are used by search engines and users to understand relevance to a particular topic.

If you want to chat to us regarding your new product or content launches, contact us here.
